Philosophy and Technology

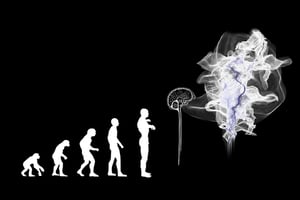

In a world where technology is more powerful than ever, we need frameworks through which to view the research and development we conduct. Technology carries both bright hope and dark possibilities for the future, and it is up to us as individuals and as a society to decide which path we want to take. In this blog post, we will explore the bright and dark possibilities of technology and why philosophy is needed now more than ever to make informed decisions about our future.

In August of 1945, the US dropped atomic bombs on two Japanese cities, immediately killing an estimated 130,000 people; tens of thousands more died later from radiation poisoning. Then-president Harry S. Truman called nuclear weapons the "greatest destructive force in history."

The discovery of nuclear power was a dire moment in world history. It was the first major dual-use technology: it delivered both the ability to destroy the world and the ability to supply near-unlimited clean energy. Its potential to deliver a vital benefit to humanity was nearly balanced by its potential to utterly destroy it.

As scientists searched for new knowledge, they delved into chemistry and biology. Other dual-use technologies came to light, ushering in miracles in medicine, materials, food production, and thousands of other valuable advances while at the same time ushering in the age of catastrophic chemical and biological weapons, the likes of which the world had never known. Nuclear, biological, and chemical (NBC) weapons were the weapons of mass destruction that threatened humanity for more than half a century.

NBC weapons gave rise to the idea of Mutually Assured Destruction (MAD). The basis of MAD is that when two rational actors can each assure the other's destruction, they will never fight. They must avoid direct conflict. There is no other choice. A major conflict is an existential threat not just to the two countries but to the entire human race.

As fragile a mechanism for peace as MAD may have been, it worked because weapons of mass destruction were available only to large states that recognized they could not win a conflict without destroying themselves. For more than 50 years, MAD helped prevent widespread conflict between major powers. But the efficacy of the MAD doctrine may have come to an end.

What happens when a weapon of mass destruction no longer requires vast resources and can be distributed worldwide with the push of a button?

It seems like a far-fetched idea, but weapons have moved beyond NBC into information-based weapons that can be created with relatively few resources. Instructions for building and using them can be distributed with the push of a button.

In 2000, Bill Joy, then chief scientist of Sun Microsystems, published a groundbreaking article warning of a new generation of weapons of mass destruction. The article, "Why the Future Doesn't Need Us," argued that robotics, genetic engineering, and nanotechnology could become the next such weapons. He described several dystopian scenarios in which these technologies led to human extinction.

His article stirred real controversy among scientists and politicians at the time, but it was mostly dismissed as naive alarmism. Few believed these technologies could be developed into significant weapons. They were too immature, and the idea of a non-state actor developing a weapon with the potential to cause widespread harm was so far-fetched as to be unthinkable.

Then the COVID-19 pandemic hit. At first, the mainstream media and most politicians dismissed the idea that the virus came from a lab. Then, in late 2021, the NIH acknowledged that it had funded research on bat coronaviruses at a lab in Wuhan, China, although it maintained that the viruses studied were too genetically distant to have become the virus the world was battling. The origin of the virus remains disputed. So far, by conservative estimates, more than six million people worldwide have died from it.

The main point is not to argue whether the virus was accidentally or intentionally released from a lab; we may never know the truth. The point is that this kind of research can be done for only a few million dollars in a small facility. There are many facilities around the world that are much larger and more sophisticated than the Wuhan lab. Any of them could become the starting point of a new genetically engineered weapon.

Joy's fear is turning out to be more prescient than anyone knew. Whether an extinction-level event is purposeful or accidental won't matter if billions of people die. He was right about the danger of genetically engineered weapons, but what about robotics (artificial intelligence) and nanotechnologies?

The development of both artificial intelligence and nanotechnologies seems to be following the path Joy most feared. Artificial intelligence is improving at a breathtaking pace, and the pace seems to be accelerating. All signs are pointing toward a machine passing the Turing test within the next ten to twenty years.

Nanotechnology is developing at a much slower rate, but headway is being made. Scientists are already creating tiny "machines" that they hope will one day be injected to intervene in certain diseases. They are exploring the building blocks of matter to understand how to create new moving structures that can do wondrous things.

New technology is not just creating additional existential threats. It is also creating new moral and ethical issues.

For example, self-driving cars are on the verge of completely disrupting the transportation industry. Millions of workers will be displaced. Many of these workers will be retrained, but how will societies cope with those who are not able or willing to restart their careers?

What will happen when self-driving vehicles are faced with ethical issues? For instance, in the event of an impending accident, a computer-driven car will be able to make near-instantaneous decisions. What if it has to choose between two different people dying or being seriously injured in the accident? Who does it protect first, its passengers or an innocent bystander?

It is an ethical dilemma, but there is an added layer. The team programming the car will decide in advance, through the car's algorithm, who will live and who will die.

Once a technology is available on the internet, the proverbial "demon" is unleashed. There is no taking it back.

The pursuit of knowledge in and of itself is not immoral, but the use of that knowledge could lead to catastrophe.

So, what is to be done?

We need a worldwide set of accepted, immutable values. An international acceptance of the right to life for all people would be helpful.

Why recognize immutable values?

Values change as human societies change, and not always for the better. In ancient times, many societies regarded human sacrifice as an acceptable and necessary practice. It was just as evil then as it would be if the practice were revived today.

In 1945, while America fought to prevent the spread of Nazism, 10-13% of Americans supported the complete extermination of the Japanese as a race, and the Allied Potsdam Conference recognized that with nuclear weapons, the "complete destruction" of a nation and people was in the power of the United States.

It was a terrifying prospect for the world. If cooler heads had not prevailed, the death toll in Japan could very well have been much, much higher. What if Truman had decided to carpet-bomb Japan with nuclear weapons? It was not a far-fetched idea.

If scientists, engineers, politicians, and the general public drew on an understanding of philosophy and universal values, it might keep the human race from embracing disaster.

As we continue to develop new technologies, it is vital that scientists consider the philosophical and ethical ramifications of their research. We must ask ourselves what implications these new technologies may have for humanity and our planet. I'd love to hear your thoughts on this topic. Please leave me a comment below.